Seeing Like a Data Structure

Schneier on Security

JUNE 3, 2024

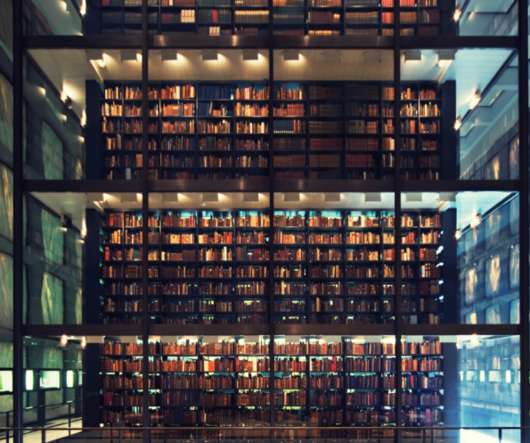

We are about to find out, as we begin to see the world through the lens of data structures. This is what life is like when we see the world the way a data structure does. Nearly every university’s curriculum introduces its students to data structures right away.
