One of the most influential books published in the last decade was Nudge: Improving Decisions About Health, Wealth and Happiness by Richard Thaler and Cass Sunstein. In it, the authors set about showing how groundbreaking research into decision-making by Daniel Kahneman and Amos Tversky decades earlier (for which Kahneman later won a Nobel prize) could be applied to public policy. The point of the book, as one psychologist puts it, is that “people are often not the best judges of what will serve their interests, and that institutions, including government, can help people do better for themselves (and the rest of us) with small changes – nudges – in the structure of the choices people face”.

Nudge proved very popular with policymakers because it suggested a strategy for encouraging citizens to make intelligent choices without overtly telling them what to do. Thaler and Sunstein argued that if people are left to their own devices they often make unwise choices and that these mistakes can be mitigated if governments or organisations take an active role in framing those choices. A classic example is trying to ensure that employees have a pension plan. An employer can encourage staff to choose a plan by emphasising the importance of having one. Or it can frame the decision by enrolling every employee in the company plan by default, with the option of opting out and choosing their own scheme.

Thaler and Sunstein describe their philosophy as “libertarian paternalism”. It involves a design approach known as “choice architecture”, and in particular control of the default settings at any point where a person has to make a decision.

Funnily enough, this is something that the tech industry has known for decades. In the mid-1990s, for example, Microsoft – which had belatedly realised the significance of the web – set out to destroy Netscape, the first company to create a proper web browser. Microsoft did this by installing its own browser – Internet Explorer – on every copy of the Windows operating system. Users were free to install Netscape, of course, but Microsoft relied on the fact that very few people ever change default settings. For this abuse of its monopoly power, Microsoft was landed with an antitrust suit that nearly resulted in its breakup. But it did succeed in destroying Netscape.

When the EU introduced its General Data Protection Regulation (GDPR) – which seeks to give internet users significant control over uses of their personal data – many of us wondered how data-vampires like Google and Facebook would deal with the implicit threat to their core businesses. Now that the regulation is in force, we’re beginning to find out: they’re using choice architecture to make it as difficult as possible for users to do what is best for them while making it easy to do what is good for the companies.

We know this courtesy of a very useful 43-page report just out from the Norwegian Consumer Council, an organisation funded by the Norwegian government. The researchers examined recent – GDPR-inspired – updates to user settings by Google, Facebook and Windows 10. What they found was that default settings and “dark patterns” (techniques and features of interface design meant to manipulate users) are being deployed to nudge users towards privacy-intrusive options. The findings include “privacy-intrusive default settings, misleading wording, giving users an illusion of control, hiding away privacy-friendly choices, take-it-or-leave-it choices, and choice architectures where choosing the privacy-friendly option requires more effort for the users”.

The researchers found that Facebook and Google in particular have privacy-intrusive defaults, “where users who want the privacy friendly option have to go through a significantly longer process. They even obscure some of these settings so that the user cannot know that the more privacy-intrusive option was preselected.”

Popup alerts from Facebook, Google and Windows 10 turn out to have design, symbols and wording that nudge users away from the privacy-friendly choices. Choices are worded “to compel users to make certain choices, while key information is omitted or downplayed. None of them lets the user freely postpone decisions. Also, Facebook and Google threaten users with loss of functionality or deletion of the user account if the user does not choose the privacy-intrusive option”.

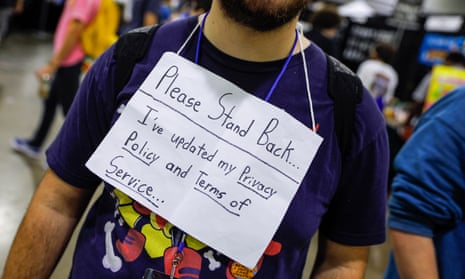

I could go on, but you will get the point. Thaler and Sunstein might be impressed by the architecture of these choices were it not for the fact that they are designed to make perverse rather than socially responsible outcomes more likely. At the root of it all, of course, is the warped business model of surveillance capitalism. So to expect the companies to behave differently would be about as realistic as expecting Dracula to request that his guests sign a consent form before sitting down to dinner.

What I’m reading

‘I was devastated’

The inventor of the web, Tim Berners-Lee, is dismayed at how his creation has been warped by corporations and ideologies – and is trying to fix it. A fascinating Vanity Fair article describes his software project.

The Age of Flux

Peter Pomerantsev argues on the American Interest website that if you want to understand what’s happening to the west, you should look to Russia, because it happened there earlier. Best read in conjunction with Tom Friedman’s New York Times essay “Why are so many political parties blowing up?”