We all fear our smartphones spy on us, but I’m subjecting myself to a new type of surveillance. An app called TapCounter records every time I touch my phone’s screen. My swipes and jabs are averaging about 1,000 a day, though I notice that number falling as I steer clear of social media to meet my deadline. The European company behind it, QuantActions, promises that by capturing and analysing the data it will be able to “detect important indicators related to mental/neurological health”.

Arko Ghosh is the company’s cofounder and a neuroscientist at Leiden University in the Netherlands. He says “tappigraphy patterns” – the time series of my touches – can be used with confidence to infer not only slumber habits (tapping in the wee hours means you are not sleeping) but also levels of mental performance (the small intervals in a series of key-presses serve as a proxy for reaction time), and he has published work to support this.
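To make the two inferences concrete, here is a minimal sketch assuming a phone simply logs a timestamp for every touch. It is not QuantActions’ method, which is not described here: the function names, the 2-second cut-off for a “burst” of key-presses and the sample tap log are all hypothetical. The longest pause between taps stands in for a sleep period, and the median interval within quick bursts of taps stands in for reaction speed.

```python
from __future__ import annotations

from datetime import datetime, timedelta
from statistics import median


def longest_gap(taps: list[datetime]) -> timedelta:
    """Longest pause between consecutive taps: a crude, hypothetical proxy
    for a sleep period (tapping in the wee hours means you are not asleep)."""
    ordered = sorted(taps)
    if len(ordered) < 2:
        return timedelta(0)
    return max(b - a for a, b in zip(ordered, ordered[1:]))


def median_burst_interval(taps: list[datetime], max_gap_s: float = 2.0) -> float | None:
    """Median interval, in seconds, between taps that follow each other within
    max_gap_s: a rough, hypothetical proxy for reaction time. The 2-second
    default cut-off is an arbitrary illustrative choice."""
    ordered = sorted(taps)
    intervals = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    short = [x for x in intervals if x <= max_gap_s]
    return median(short) if short else None


# Made-up tap log: a short burst just after midnight, then nothing until 7am.
day = datetime(2021, 10, 1)
taps = [day + timedelta(hours=h, seconds=s)
        for h, s in [(0, 0), (0, 0.3), (0, 0.7), (7, 0), (7, 0.25), (7, 0.5)]]

print("Longest pause:", longest_gap(taps))
print("Median burst interval (s):", median_burst_interval(taps))
```

A real system would need far more care, for instance distinguishing a sleeping user from one who has simply put the phone down.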

QuantActions plans to launch features based on those insights next year. But further into the future, Ghosh would like to use the technology for medical purposes, including to predict seizures in people with epilepsy. This year Ghosh published a small clinical study of people with epilepsy that shows how subtle changes in smartphone tappigraphy alone could be used to infer abnormalities in their brainwaves. “Our hope is that some day we can forecast upcoming episodes,” says Ghosh.

Ghosh’s work and my taps are part of the new but rapidly developing field called digital phenotyping. It aims to take the huge amounts of raw data that can be continuously collected from people’s use of smartphones, wearables and other digital devices and analyse them using artificial intelligence (AI) to infer behaviour related to health and disease.

If symptom-related digital signals – called digital biomarkers – can be properly teased out, they could provide a new route for diagnosing or monitoring a range of medical conditions, particularly those relating to mental or brain health. Early research suggests patterns in geolocation data may correlate with episodes of depression and relapses in schizophrenia; certain keystroke patterns could predict mania in bipolar disorder; and the way toddlers gaze at a smartphone screen could be used to detect autism.

Data streams include smartphone activity logs, measurements from any of a phone’s built-in sensors (such as the GPS, accelerometer or light sensor) as well as user-generated content, which can be mined for words or phrases. “It is classic big-data analytics… repurposing data for reasons other than it was primarily collected,” says Brit Davidson, an assistant professor of analytics at the University of Bath, UK, who has been critically watching the field develop.

The technology is attracting big tech companies’ interest. In September, the Wall Street Journal reported that Apple is working on iPhone features to help diagnose depression and cognitive decline. Others, such as Google, are also reportedly interested. Apple is probably hoping to correlate various phone indices with other indices it considers indicative of mental health disorders, says Helen Christensen, a professor of mental health at the University of New South Wales in Australia, who also leads the nonprofit Black Dog Institute, which focuses on the diagnosis, treatment and prevention of mood disorders.

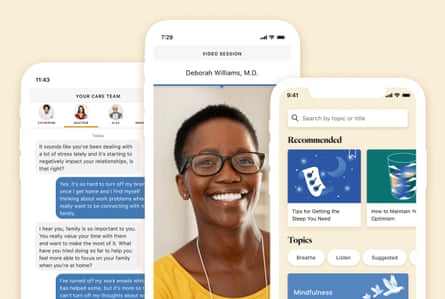

Meanwhile, Silicon Valley-based consumer health and wellness startups are already incorporating aspects of the technology into their products, albeit not yet for clinical diagnosis. Mindstrong provides therapy and psychiatry services virtually and has received tens of millions of dollars in funding, including from Jeff Bezos’s venture capital firm. It claims to use patented technology to track how clients tap, scroll and click on their phones so its clinicians can provide “more personalised care”. Although this isn’t described on its website, a spokesperson for Ginger, which offers on-demand mental health support, confirmed the company uses a “relatively rudimentary form” of digital phenotyping algorithm to analyse text conversations between users and its coaches to provide insights. Transparency about what some companies are doing can be lacking, notes Nicole Martinez-Martin, a bioethicist at Stanford University in California who focuses on digital health technology.

Traditionally, diagnosing mental illness has relied on self-reported experiences and medical assessment conducted at a clinic. But this captures just one point in time and can be highly subjective. Digital phenotyping offers the possibility of collecting continuous behavioural data capturing a person’s lived experiences. “It could give us a more accurate way to diagnose people,” says Jukka-Pekka Onnela, a biostatistician at Harvard University, Massachusetts, who has helped pioneer digital phenotyping and has a number of ongoing projects in the area, including with industry (Onnela is also part of the leadership team of a large research collaboration with Apple to study women’s health, including by tracking menstrual cycles via the iPhone).

It could also – in a world where mental health problems are increasing and services are stretched – make things cheaper, quicker and more efficient. People could manage their own mental health better and those who aren’t aware they have a problem could be alerted. “It is worth investigating,” says Christensen. “If we can find that this data is relevant… it would be a big breakthrough.”

Cogito Companion illustrates how the technology might one day be used. It is an experimental decision support tool intended for use by clinicians to help diagnose mood and anxiety disorders. It was developed by the Boston-based startup Cogito with funding from the Pentagon’s research agency, the Defense Advanced Research Projects Agency (Darpa). The company is working towards being able to use it medically for veterans and military personnel, and it is currently being clinically trialled with 750 sailors returning from overseas deployments. (Cogito has also fed the technology into an AI coaching product to help call centre staff be more empathic, which it sells commercially.) Installed as an app on a participant’s phone, the tool passively looks for signs of collapses in social interactions and indications that activities are being avoided by examining changes in text and call patterns and mobility data. It also looks for signals of depressed mood by analysing not what is said but the way participants speak in short voice diaries they record. About 200 different signals from the voice, from energy to pauses to intonation, are analysed, says Skyler Place, chief behavioural science officer for Cogito, adding that an overall “risk score” is then sent to the person’s clinician to aid diagnosis and support.

Yet while there is a lot of promise, the science of digital phenotyping has a long way to go; there are questions about privacy and whether it is a technology that will best serve society.

First, much work is required to prove that meaningful medical information can be derived. Many of the published academic studies have been very small pilot studies. Ghosh’s epilepsy study, for example, involved only eight people. The only scientific work Mindstrong’s website cites to support its product is a single study of 27 people. If the algorithms are to be used for medical purposes, studies will need to involve many thousands of people, says Christensen. And pilots are now giving way to some larger studies. The current trial of the Cogito Companion builds on a smaller proof-of-concept study.

Some research is beginning to include healthy people too (the pilot studies often include only those who already have certain conditions). The BiAffect study, run by researchers at the University of Illinois at Chicago, focuses on using keystroke behaviour to predict bipolar episodes. It has an open science component that allows members of the public to download an app and take part, so that differences between healthy adults and those with bipolar disorder can be better understood. It has about 2,000 participants.

Apple’s interest in smartphone diagnosis appears to stem from previously announced research collaborations to study digital biomarkers of depression and anxiety with the University of California, Los Angeles (UCLA) and mild cognitive impairment with pharmaceutical giant Biogen (which also has a controversial new drug to treat it). Both are gathering a wide range of data from participants’ iPhones and Apple Watches and combining them with survey responses and cognitive tests. The UCLA study started with a pilot phase of 150 people and is due to continue with a main phase tracking 3,000 participants, which will also include healthy people. The Biogen study, which began in September, plans to enrol a mix of participants – 23,000 in total – and includes recruitment from the general population.

The primary scientific challenge for the field, experts say, is that the data is so noisy. People use their phones so differently that it can be hard to compare behaviour between individuals, or even in the same person over time. Links between online and offline behaviour can also be unclear. As Brit Davidson points out, a sudden drop in smartphone-based communication could be a sign of social withdrawal, or it might mean someone is communicating face-to-face instead.

There is also the potential for bias in algorithms – well documented in other AI-based technology – that can mean certain groups of people are affected in negative ways or don’t end up benefiting from the technology. A lot of health-related research tends to use white, more affluent and educated populations, notes Stanford’s Martinez-Martin. “How it transfers is a big question,” she says.

Privacy issues also loom. While data gathered for academic research studies follows strict protocols, things can get murkier with data gathered by private companies. Data that includes predictive inferences made with digital phenotyping approaches can be shared by companies in ways that not everyone is aware of but that could have impacts, says Martinez-Martin. “These inferences could be of interest to employers, insurers or education providers and they could use them in ways that are detrimental,” she says. And just because data is “deidentified” – made anonymous – doesn’t mean it can’t be reidentified in some way.

And while there is protection under US law for sensitive health information, it generally covers only information collected within healthcare systems, not by tech companies. In any case, it is unclear whether established definitions of sensitive health information include the kind of information that digital phenotyping strives to collect. “The old system of protecting what we thought of as sensitive data is not really appropriate for this new digital world,” says Martinez-Martin.

There is also the possibility that digital phenotyping will disrupt or compete with doctors.

What if an algorithm’s assessment comes to be viewed by doctors or patients as more objective? What happens if a tool’s recommendations differ from a physician’s? Technology does have a place within mental health services and using software to help spot signs of mental health problems is interesting, says Rosie Weatherley, information content manager at the mental health charity Mind. “[But] human interaction and professional clinical judgment are not replaceable and should remain an essential component of a patient’s experience of diagnosis, accessing treatment and support.”

Lisa Cosgrove, a professor of counselling psychology at the University of Massachusetts, Boston, who studies social justice issues in psychiatry, raises a more philosophical issue. Digital phenotyping’s intense focus on the individual deflects attention from what can be the upstream socio-political causes of mental health issues, such as job loss, eviction or discrimination. “Certainly there’s a place for individual care… but digital phenotyping misses the context in which people experience emotional distress,” she says.

Ghosh, however, is hopeful that the field can be beneficial to society. Having such data available for research is a very new phenomenon, and time and effort are needed to study it. “We need to make sure we are actually helping people and not disturbing their lives,” he says.
