In an age of austerity, and a climate of fear about child abuse, perhaps it is unsurprising that social workers have turned to new technology for help.

Local authorities – which face spiralling demand and an £800m funding shortfall – are beginning to ask whether big data could help to identify vulnerable children.

Could a computer program flag a problem family, identify a potential victim and prevent another Baby P or Victoria Climbié?

Years ago, such questions would have been the stuff of science fiction; now they are the stuff of science fact.

Bristol is one place experimenting with these new capabilities, and grappling with the moral and ethical questions that come with them.

Gary Davies, who oversees the council’s predictive system, can see the advantages.

He argues that it does not mean taking humans out of the decision-making process; rather it means using data to stop humans making mistakes.

“It’s not saying you will be sexually exploited, or you will go missing,” says Davies. “It’s demonstrating the behavioural characteristics of being exploited or going missing. It’s flagging up that risk and vulnerability.”

Such techniques have worked in other areas for years. Machine learning systems built to mine massive amounts of personal data have long been used to predict customer behaviour in the private sector.

Computer programs assess how likely we are to default on a loan, or how much risk we pose to an insurance provider.

Designers of a predictive model have to identify an “outcome variable”, which indicates the presence of the factor they are trying to predict.

For child safeguarding, that might be a child entering the care system.

They then attempt to identify characteristics commonly found in children who enter the care system. Once these have been identified, the model can be run against large datasets to find other individuals who share the same characteristics.
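The steps above can be sketched in a few lines of Python. This is a toy illustration, not the method used by any council or supplier, whose models have not been published in detail. It learns, for each indicator, what share of past cases with that indicator went on to show the outcome, then scores new individuals by averaging those shares. The records, the function names and the 0.6 threshold are all invented for the example; only the three indicator names are taken from the reporting on Thurrock.

```python
from collections import Counter

# Invented past records: (indicators present, outcome variable).
# The outcome is True if the child later entered the care system.
HISTORY = [
    ({"domestic_abuse", "truancy"}, True),
    ({"domestic_abuse"}, True),
    ({"truancy", "youth_offending"}, True),
    ({"truancy"}, False),
    ({"youth_offending"}, False),
    (set(), False),
    (set(), False),
    (set(), False),
]


def learn_weights(history):
    """Share of past cases carrying each indicator that had the outcome."""
    seen = Counter()
    positive = Counter()
    for indicators, outcome in history:
        for name in indicators:
            seen[name] += 1
            if outcome:
                positive[name] += 1
    return {name: positive[name] / seen[name] for name in seen}


def score(indicators, weights):
    """Average learned weight over the indicators present (0.0 if none)."""
    known = [weights[name] for name in indicators if name in weights]
    return sum(known) / len(known) if known else 0.0


def flag(population, weights, threshold=0.6):
    """Return the ids whose score meets the (hypothetical) threshold."""
    return [
        person_id
        for person_id, indicators in population.items()
        if score(indicators, weights) >= threshold
    ]


WEIGHTS = learn_weights(HISTORY)

# Invented individuals to run the model against.
POPULATION = {
    "A": {"domestic_abuse"},
    "B": {"youth_offending"},
    "C": set(),
    "D": {"truancy", "domestic_abuse"},
}

FLAGGED = flag(POPULATION, WEIGHTS)
print(FLAGGED)
```

In this sketch the flag is only a prompt for a human to look more closely, which is the role Davies describes. A real system would need far more data, a properly validated statistical model and checks for bias.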

The Guardian obtained details of all predictive indicators considered for inclusion in Thurrock council’s child safeguarding system. They include history of domestic abuse, youth offending and truancy.

More surprising indicators such as rent arrears and health data were initially considered but excluded from the final model.

In both Thurrock, a council in Essex, and the London borough of Hackney, families can be flagged to social workers as potential candidates for the Troubled Families programme. Through this scheme councils receive grants from central government for helping households with long-term difficulties such as unemployment.

Such systems inevitably raise privacy concerns. Wajid Shafiq, the chief executive of Xantura, the company providing predictive analytics work to both Thurrock and Hackney, insists that there is a balance to be struck between privacy rights and the use of technology to deliver a public good.

“The thing for me is: can we get to a point where we’ve got a system that gets that balance right between protecting the vulnerable and protecting the rights of the many?” said Shafiq. “It must be possible to do that, because if we can’t we’re letting down people who are vulnerable.”

Automated profiling raises difficult questions about the legal basis on which it is carried out. Councils do not seek explicit consent for this kind of data processing, instead relying on legal gateways such as the Children Act.

“A council will say it has processed personal data in this way because it has a legal obligation to protect the welfare of children. However, this doesn’t give them a blanket opt-out of all data protection provisions,” said Michael Veale, a researcher in public sector machine learning at University College London.

“For ‘child abuse data’ the law allows them to refuse to provide information about specific cases, but they still need to give certain information proactively in advance, such as the general way that their systems work.”

There is no national oversight of predictive analytics systems by central government, resulting in vastly different approaches to transparency by different authorities. Thurrock council published a privacy impact assessment on its website, though it is not clear whether this has raised any real awareness of the scheme among residents.

Hackney council has also published a privacy notice in the past. However, a separate privacy impact assessment, released in response to a freedom of information request earlier this year, stated: “Data subjects will not be informed, informing the data subjects would be likely to prejudice the interventions this project is designed to identify.” Hackney refused to tell the Guardian what datasets it was using for its predictive model.

By contrast, in an Essex county council predictive scheme to identify children who would not be “school ready” by their fifth birthday, the anonymised data is aggregated to a community level and shared with parents and services who are part of the project’s delivery group. These community participants help decide how funding should be allocated or working practices changed on the basis of data findings.

Shafiq said all council data processed by his company was used only with consent or under legislation. “When that referral comes into the front door there’s legislation that allows the data to be shared,” he said. “You don’t get to do Cambridge Analytica-type things in the public sector. It doesn’t work.”

But he acknowledged that advocates of predictive analytics need to do more to raise public awareness. “There needs to be more done around engagement and educating people about what we’re using it for,” he said.

“There needs to be more informed discussion with the public around agreeing what is appropriate and proportionate.”

Another criticism of predictive analytics is that there is a risk of oversampling underprivileged groups, because a council’s social services department will inevitably hold more data on poor families than it does on wealthy ones.

“If you only have data for families relying on council resources, like public housing, then the model doesn’t have all the information it needs to make accurate predictions,” said Virginia Eubanks, author of a book called Automating Inequality.

“If there are holes in the system or you’re over-collecting data on one group of people, and none at all on others, then it will not only mirror inequalities, but amplify them.”

Veale used the example of private versus state schools as a case where children at risk might not be identified. “Children in independent schools might never pass a certain level of ‘risk’ within a model, but this doesn’t mean that there’s not any kids at risk of child abuse in private schools. You’ll just disproportionately miss them with systems trained on public sector datasets,” he said.

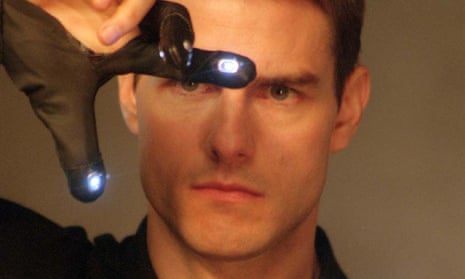

Technology to intervene before crime can happen inevitably draws comparisons to the science-fiction film Minority Report, in which detectives use intelligence gathered from the dreams of psychics to arrest people for crimes before they commit them. “I’ve heard that Minority Report reference I can’t tell you how many times,” said Shafiq, who cringes at the comparison. “We haven’t got someone asleep, dreaming, there’s slightly more to it than that.”

But the reference raises important questions about software accuracy. Predictive analytics systems in the real world are designed by humans, and risk incorporating and replicating all of their potential assumptions and mistakes. Both Hackney and Thurrock claim the accuracy rate of their software is around 80%.

“Child abuse is an ethical quagmire – you’re damned if you do, and you’re damned if you don’t,” said Veale. “When you predict events like these, you tend to get false positives. If you want to make sure you don’t miss children who are at risk, you’re going to play it safe and cast your net widely.”
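A rough calculation shows why false positives matter even at a headline accuracy of 80%. The councils have not said what that figure measures, so the sketch below makes two loud assumptions: that the system correctly flags 80% of children who are at risk and correctly clears 80% of those who are not, and that 2% of a population of 10,000 children are genuinely at risk. The population size and the 2% figure are invented purely to illustrate the arithmetic.

```python
def flagged_breakdown(population, prevalence, sensitivity, specificity):
    """Split the children a model flags into correct and mistaken flags.

    All inputs are hypothetical; none comes from a council's system.
    """
    at_risk = population * prevalence
    not_at_risk = population - at_risk
    true_positives = at_risk * sensitivity            # at risk, and flagged
    false_positives = not_at_risk * (1 - specificity)  # not at risk, but flagged
    share_correct = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, share_correct


# Assumed figures: 10,000 children, 2% at risk, 80% on both measures.
TRUE_POS, FALSE_POS, SHARE_CORRECT = flagged_breakdown(
    population=10_000, prevalence=0.02, sensitivity=0.8, specificity=0.8
)

print(f"Correctly flagged: about {round(TRUE_POS)}")
print(f"Flagged in error:  about {round(FALSE_POS)}")
print(f"Share of flags that are correct: {SHARE_CORRECT:.1%}")
```

Under these assumptions roughly 160 children would be flagged correctly and roughly 1,960 in error, so fewer than one flag in 10 would point to a child who is actually at risk. Different assumptions would give different numbers, but the pattern holds whenever the thing being predicted is rare.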