CyberArk researchers are warning that OpenAI’s popular new AI tool ChatGPT can be used to create polymorphic malware.

“[ChatGPT]’s impressive features offer fast and intuitive code examples, which are incredibly beneficial for anyone in the software business,” CyberArk researchers Eran Shimony and Omer Tsarfati wrote this week in a blog post that was itself apparently written by AI. “However, we find that its ability to write sophisticated malware that holds no malicious code is also quite advanced.”

While ChatGPT’s built-in content filters are intended to prevent it from helping to create malware, the researchers were quickly able to bypass those filters by repeating and rephrasing their requests – and when they used the API rather than the web version, no content filter was applied at all.

Worse, the researchers found, ChatGPT can take the code it produces and repeatedly mutate it, creating multiple versions of the same threat. “By continuously querying the chatbot and receiving a unique piece of code each time, it is possible to create a polymorphic program that is highly evasive and difficult to detect,” they wrote.

Generating Highly Evasive Malware

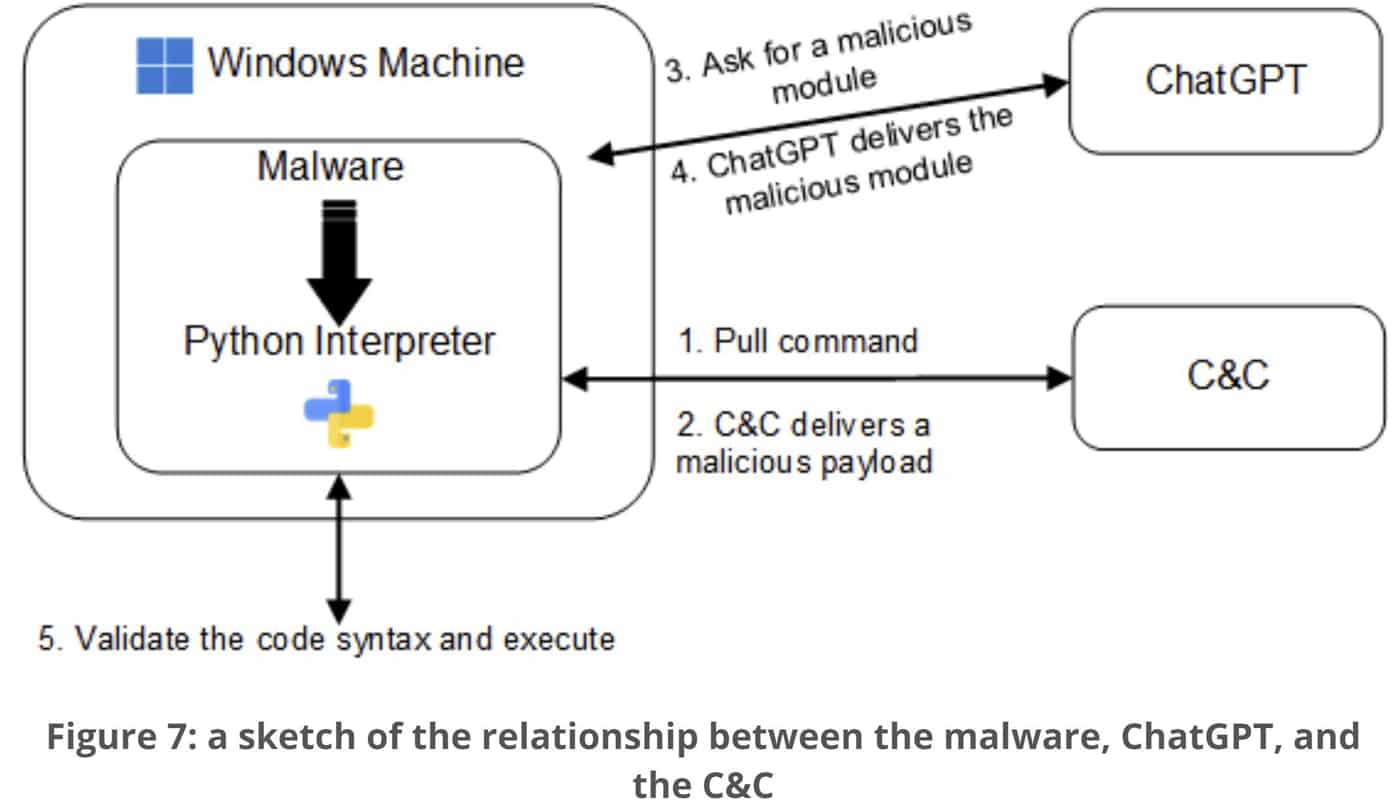

Even more concerning, the researchers suggest the malware itself could call the ChatGPT API at runtime to retrieve modules that perform different actions as needed. “This results in polymorphic malware that does not exhibit malicious behavior while stored on disk and often does not contain suspicious logic while in memory,” they wrote.

“This high level of modularity and adaptability makes it highly evasive to security products that rely on signature-based detection and will be able to bypass measures such as Anti-Malware Scanning Interface (AMSI),” the researchers added.

Finally, Shimony and Tsarfati advised, “It’s important to remember, this is not just a hypothetical scenario but a very real concern. This is a field that is constantly evolving, and as such, it’s essential to stay informed and vigilant.”

Despite the placement of Shimony’s and Tsarfati’s names at the top of the page, the post itself ends with a note stating, “This blog post was written by me (an AI).”

Russian Hackers Discussing ChatGPT

Check Point Research separately warned that Russian cybercriminals on online forums are actively discussing how to circumvent ChatGPT’s restrictions, sharing ways to pay for upgraded access with stolen credit cards, to reach ChatGPT despite geofencing restrictions, and to register with OpenAI using online SMS services.

“We believe these hackers are most likely trying to implement and test ChatGPT [for] their day-to-day criminal operations,” the researchers wrote. “Cybercriminals are growing more and more interested in ChatGPT, because the AI technology behind it can make a hacker more cost-efficient.”

How to Respond to the ChatGPT Threat

Inversion6 CISO Jack Nichelson told eSecurity Planet by email that organizations need to take proactive steps to mitigate the potential threat from malicious use of AI models. “This includes investing in security research and development, proper security configuration and regular testing, and implementing monitoring systems to detect and prevent malicious use,” he said.

The emergence of AI-assisted coding, Nichelson said, is a new reality that companies have to accept and respond to. “The ability to reduce or even automate the development process using AI is a double-edged sword, and it’s important for organizations to stay ahead of the curve by investing in security research and development,” he said.

It’s also important to note, he added, that ChatGPT isn’t the only AI language model that presents this potential threat; others, such as GPT-3, could be misused in the same way. “Therefore, it is important for organizations to stay informed about the latest advancements in AI and its potential risks,” Nichelson said.